vacation

June 27, 2009

Well, dear readers, I’m off for eight weeks of summer vacation. I’ll be heading to Tennessee, Colorado, California, Canada, and Ohio visiting various family and friends. I’m not sure what my internet connection will be like during this period, but I’m guessing it’ll be sketchy. I still hope to do some intermittent posting now and again, so please keep checking the blog. I’ll be back to normal at the end of August.

Have a great summer.

anticipating wrongdoing

June 27, 2009

Those of you who remember the movie Minority Report, with Tom Cruise, are familiar with the idea of anticipating someone’s future wrongdoing and then taking preventative action against it. It’s an interesting idea, but when the movie came out in 2002, it was still science fiction. Now, it seems we could be getting closer to something like that, judging by the preliminary (unpublished) results that Vincent Clark of the University of New Mexico at Albuquerque presented in a talk at the Organization for Human Brain Mapping conference.

Clark claims that he can predict which drug addicts will relapse after treatment with 89% accuracy using both traditional psychiatric techniques and fMRI brain imaging. He used 400 subjects in his decade-long study. What’s interesting about this approach is that it involves a more serious level of quantitative analysis (from the fMRI) than most psychiatric evaluations and thus would be a more rigorous metric by which to measure patients against a standard.

While determining whether patients in treatment will relapse (and thus might need more treatment) is a beneficial evaluation for both society and the patient, it’s not hard to extend this type of test to a more ethically difficult scenario. Suppose someone develops a test that, with 90% accuracy, determines (via MRI or some other such technique) whether a violent offender in prison will commit a repeat act of violence after being paroled. I think we’re a way off (if it’s even possible) from such a test, but still, the thought experiment is interesting.

How would our criminal justice system handle such a test? Since the ostensible goal of our penitentiaries is to “reform” those who’ve done wrong, could such a test be used to determine at what point someone’s been “reformed”? How do we balance the idea of reform with the idea of penance, a similarly old but quite different justification for imprisoning someone? How much testing of such a test would we need before actually implementing it, given that an incorrect diagnosis could lead to either additional harm to citizens or wrongful confinement? Is there any (non 100%) level of efficacy that would be acceptable?
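To make the stakes of a “90% accurate” test concrete, here is a rough back-of-the-envelope sketch in Python. Everything in it beyond the 90% figure is an assumption for illustration: I treat “90% accuracy” as meaning the test is right 90% of the time for both groups (those who would reoffend and those who would not), and I invent a population of 1,000 parolees with a 30% reoffense rate. The function and variable names are mine, not from any real study.

```python
def screening_outcomes(population, base_rate, sensitivity, specificity):
    """Expected counts when a yes/no test is applied to a whole population.

    base_rate   -- fraction who would actually reoffend (hypothetical)
    sensitivity -- chance the test flags someone who would reoffend
    specificity -- chance the test clears someone who would not
    """
    would_reoffend = population * base_rate
    would_not = population - would_reoffend

    true_pos = would_reoffend * sensitivity   # correctly flagged
    false_neg = would_reoffend - true_pos     # cleared, but would reoffend
    true_neg = would_not * specificity        # correctly cleared
    false_pos = would_not - true_neg          # flagged, but would not reoffend

    flagged = true_pos + false_pos
    return {
        "wrongly_held": false_pos,
        "wrongly_released": false_neg,
        "share_of_flagged_who_are_safe": false_pos / flagged,
    }


# Hypothetical inputs: 1,000 parole candidates, 30% of whom would reoffend,
# and a test that is 90% right in both directions.
outcomes = screening_outcomes(1000, 0.30, 0.90, 0.90)
for name, value in outcomes.items():
    print(f"{name}: {value:.2f}")
```

Under these made-up numbers, about 70 people would be held who pose no danger and about 30 would be released who go on to reoffend, so roughly one in five of the people the test flags would be confined wrongly. Change the assumed reoffense rate and those proportions shift considerably, which is part of why the question of an acceptable efficacy is so hard.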

It strikes me that implementing a test like this in our criminal justice system would force us to rework a good deal of the philosophy behind locking people up (which I don’t think would be a bad thing). It’s an interesting thought experiment now, but perhaps in a few decades it will become a reality.

optogenetics and brain control

June 24, 2009

Blue light causes the cells to become activated, sending out electrical signals. Yellow light causes the cells to become inactive, blocking any signal propagation through them. (credit: h+ Magazine)

Implanting electrodes in someone’s brain and then shocking them seems somewhat sci-fi to most people, but it’s a medical reality. We’ve found that by electrically stimulating parts of the brain and vagus nerve, we can reduce the effects of epileptic seizures, Parkinson’s, and other disorders.

This whole process sounds incredibly complicated, and it is, but from a larger perspective, it’s quite simplistic. Basically, we just stick wires in people’s heads and shock them and see if their symptoms decrease. A slight divergence from this external electrical stimulation is the subfield of optogenetics, a term first coined by Karl Deisseroth at Stanford. I read a nice summary of his recent work in h+ Magazine and will give you the thumbnail sketch.

Instead of sticking an electrode down into your brain, Deisseroth’s group inserts a fiber optic cable that can deliver yellow and blue light. Normal neurons are not light sensitive, but the targets of this optical stimulation are genetically modified to be so. Deisseroth’s group squirts in a bit of genetically engineered virus at exactly the spot in the brain they want to stimulate. This virus carries two genes, culled from algae and archaea, that reprogram the surrounding neurons to be sensitive to blue and yellow light.

These neurons now become inhibited, unable to produce an electrical signal, when subjected to the yellow light and excited, producing an electrical signal, when subjected to the blue light.

This process, while a good bit more involved than brain stimulation, achieves basically the same thing: getting neurons to fire when we want them to. But it also has the benefit of allowing us to directly block neurons from firing, which we could only somewhat achieve through electrical stimulation by shocking one part of the brain and hoping that it causes another part to go quiet in the way we want. Silencing areas of the brain directly could prove an incredible boon in getting the brain to behave in the way that we want.

I see this optogenetic technology as an additional tool, not necessarily a replacement, to electrical brain stimulation. As our understanding of how the neurons in specific parts of the brain are connected and our technology for controlling those neurons improve, our ability to mediate the many debilitating diseases and conditions that plague us will improve dramatically.

Some of you may be uncomfortable with the idea of messing around “under the hood” of the most complicated machine in the world, but I’d then ask you how direct stimulation (or inhibition) is really any different than the many drugs that target the brain. This technology is simply the next step in our ability to fine-tune ourselves.

misguided spaceflight

June 24, 2009

The Obama administration has ordered a review of NASA’s human spaceflight program, the next iteration of which is called Constellation and is planned to take us back to the moon in 2020 and to Mars around 2030. Budget woes may delay the program, but I question the strategy of returning to the moon.

To properly approach this issue, I must first explain the entire context of manned spaceflight. In my opinion, it’s a PR campaign. In the Cold War 50s, 60s, and 70s, we used spaceflight to flex our technological muscle. Not only did landing on the moon and the rest of the groundbreaking missions flaunt our scientific and engineering abilities to the world, they also inspired a generation of scientists and engineers here in the U.S. Hell, it still inspires me that we were able to land people on the moon.

Another perspective, one could argue, is that exploring is what we humans do, from Marco Polo to Leif Ericson to Shackleton. Exploring our planetary neighbors is simply the next phase. I’m quite amenable to this idea because I attach our desire to explore to our general quest to make sense of our surroundings.

Pursuing this desire is an important undertaking, but it shouldn’t distract from the rest of space exploration through probes, robots, and telescopes, a project with much more (in my opinion) scientific and philosophical promise. Given the massive cost of sending a human to the moon again (the GAO estimates as high as $230 billion!), I can’t help but think that we can get more bang for our buck in other cosmological projects.

The ultimate goal for NASA’s Constellation project is putting humans on Mars (and returning them, of course). I think this goal is fruitful and is the natural next step in our space exploration. Going to the moon again simply because we can seems like a waste of time and resources.

When space exploration bleeds into politics and PR (and believe me, NASA’s got plenty to go around), we must be all the more thoughtful about which direction in space we’re going.

faith in AI

June 22, 2009

Namit Arora at 3quarksdaily has a very interesting and thoughtful post about the future (or lack thereof) of true artificial intelligence.

He does a good job of tracing the major phases of AI design, from essentially large databases to the more modern neural networks. He points out that while AIs have become more and more capable of solving well-defined problems (although one could argue we’ve been able to expand the set of solvable well-defined problems a great deal over the years), ultimately they will fail to reach the truly human je ne sais quoi because they are unable to become completely immersed in the human experience of emotions, relationships, and even simple relationships between objects and things in our world. (Arora borrows many of these ideas, which I am only briefly paraphrasing, from Hubert L. Dreyfus, who borrows from Heidegger.)

While I agree that we are nowhere near the singularity, as Ray Kurzweil would have you believe, I disagree that we are no nearer than when we started in the early days of artificial intelligence (that is, the 60s and 70s).

A big shift in the development of AI, in my opinion, was moving away from the teleological view of intelligence, away from “This is how we think the mind works, so this is how we’re going to program our AI.” The transition from symbolic (brute force) AI to neural networks marks a large shift in that it’s basically an acknowledgement that we programmers don’t know how to solve every problem. Now, what we still know how to do (and must do for now at least) is to define our problems. Thus, if I make an AI to solve a certain problem, I may run it through millions of machine-learning iterations so that it can figure out the best way to solve that problem, but I’m still defining the parameters, the heuristics that make that program determine whether the current technique it’s testing is good or not.
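Here is a toy illustration of that division of labor, as a minimal sketch rather than anything resembling a real neural network. I’ve swapped in the simplest learning loop I know of, random hill climbing, and every name and number in it (`score`, `hill_climb`, `TARGET`, the step size) is made up for the example. The point is only that the program finds the answer on its own while I, the programmer, still write the yardstick it is judged by.

```python
import random


def score(candidate, target):
    """Programmer-defined heuristic: higher is better.

    This is the part the human still has to write. Here it is just the
    negative squared distance between the candidate and a known target.
    """
    return -sum((c - t) ** 2 for c, t in zip(candidate, target))


def hill_climb(target, iterations=20000, step=0.1, seed=0):
    """Randomly nudge a guess, keeping each nudge only if the score improves."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    best = [0.0] * len(target)
    best_score = score(best, target)
    for _ in range(iterations):
        trial = [x + rng.uniform(-step, step) for x in best]
        trial_score = score(trial, target)
        if trial_score > best_score:
            best, best_score = trial, trial_score
    return best, best_score


TARGET = [3.0, -1.5, 0.5]  # hypothetical "right answer" the search never sees directly
solution, final_score = hill_climb(TARGET)
print([round(x, 2) for x in solution], final_score)
```

The search loop knows nothing about the problem except what `score` tells it. Swap in a different `score` and the same loop solves a different problem, which is exactly the sense in which the problem definition still comes from us.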

I agree that this approach, while yielding many powerful problem-solving applications, is ultimately doomed. But in pursuing it, we have bootstrapped ourselves into a less well-defined area of AI. If you believe (as I do, although I don’t like the religious aspects of the word “believe”) that the brain is simply a collection of interconnected cells and nothing else, then in theory we can recreate it in silicon. The problem arises in determining how the cells (nodes in comp sci language) are interconnected. How can we even know where to start?

And here’s where the faith aspect comes in. I’ll call it what it is. As our understanding of the functional aspects of the brain improves (thanks to all the tools of modern technology), and as our computational processing and storage capabilities grow along with it, I find it hard to think that we will not ultimately get there.

Yes, we will probably need a more philosophical view of what it means to be human and sentient. Yes, it will probably take us a long, long time from now, perhaps even after my lifetime, but remember, the field is incredibly new. I’m heartened by work done by Jeff Krichmar’s group at UC Irvine with neurobots in approaching the idea of intelligence from a non-bounded perspective.

As our technology and understanding of intelligence improve, I simply cannot believe (and here, perhaps, I am using a more religious flavor) that our quest to understand ourselves would allow us to abandon this project.

water vigilantism

June 22, 2009

The U.S. Global Change Research Program recently released a study, which, among many daunting scenarios, projects the change in precipitation from 1961-79 to 2080-99. Needless to say, there’s going to be a good bit less water falling over the coming years in the Southwest. This oncoming drought threatens both the future of agriculture and domestic and commercial water use in the area.

The politics of water rights in the West are fascinating and far too complicated to describe here. But the idea is that people who were “first in line,” so to speak, to sign up for the right to a certain amount of water from a river or other source get first dibs. What this translates into, though, is that preventing water from going into those natural channels is in effect “stealing” from the large pot that eventually gets divided along various lines.

I might be more sympathetic to this “crime” were it not for the following:

1) the way in which water rights are divided is incredibly complicated and often outdated;

2) it’s much more efficient to capture water on your own property, for your own use, than to let that water flow into natural channels only to have it pumped back to your house or building.

In April, Colorado passed a limited measure allowing people off the water grid to collect their own water for use in watering lawns and gardens. While a step in the right direction, it’s not clear that other states will follow suit and/or expand water collection rights to those on the water grid.

Many homeowners have taken matters into their own hands regarding water usage, breaking the existing water laws. I support these measures both because I think they’re simply more efficient and because they set the stage for future improvements in water usage.

Some (mainly homeowners) have taken to collecting their roof water in barrels or cisterns. Others recycle greywater from washing machines, sinks, and showers (note, not toilets or sinks with garbage disposals) back into their yards. Allowing this kind of intelligent recycling promotes conservation on a local level as well as prevents us from wasting potable, treated water for things that don’t need it.

What’s more, in these two systems, the water not sucked up by the plants simply goes back into the natural aquifers.

We need to move to a more efficient and smart way of using this ever-dwindling resource in the West. Everyone acknowledges water shortage is a large problem, which may explain why many authorities sometimes look the other way over these types of infractions. When enough people embrace a technology or behavior that aims to ameliorate a serious problem, it becomes acceptable and can then become law.

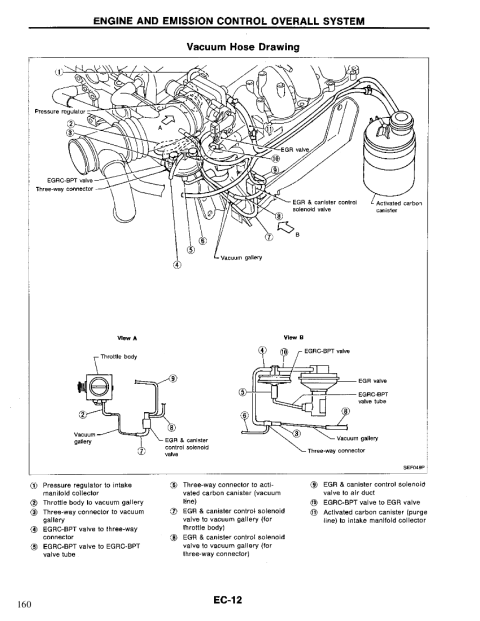

cars are pretty complicated

June 19, 2009

I’ve been working on my car (’95 Infiniti G20) recently and really am only beginning to appreciate how complicated and well-engineered it is. I think most of us take for granted that cars are complicated, a view certainly confirmed by looking under the hood. The interesting thing, though, is that every aspect of that complication is engineered in a very precise way. The thought that a team of people (or more like many teams over the years) sat down and designed every tiny part, every bend in the hose, every connection and bolt, really just boggles my mind.

Cars pale in comparison in complexity to something like a 747, an aircraft carrier, an industrial park, or a nuclear reactor, but they’re still incredible feats of engineering. We take so much of this complexity and mindful engineering for granted that it’s sometimes nice to step back and say, “You know, we humans are pretty damn clever.”

simple, cheap, scalable solar

June 18, 2009

This news actually broke about a year ago, but I doubt many of you saw it.

A few MIT students took a class on solar concentrators and then built one. The main advantage of this design is that it’s cheap, simple, and fairly easy to build while still being incredibly powerful. It concentrates the sun’s light roughly a thousand-fold at the focal point, enough to cause a two by four to spontaneously combust. The short descriptive video is well worth the watch.
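For a rough sense of what “a thousand-fold” means, here is a quick back-of-the-envelope calculation in Python. Only the thousand-fold figure comes from the story above. The 1,000 watts per square meter is a common round number for bright, clear-sky sunlight, and the 10 square meter dish is a size I made up for illustration, not the actual dimensions of the students’ concentrator. The sketch also ignores mirror losses unless you pass in an efficiency.

```python
ASSUMED_SUNLIGHT_W_PER_M2 = 1000.0  # round figure for clear-sky peak sun (assumption)
CONCENTRATION = 1000.0              # "roughly a thousand-fold", from the post
HYPOTHETICAL_DISH_AREA_M2 = 10.0    # made-up dish size, for illustration only


def focal_flux(sunlight_w_per_m2, concentration, optical_efficiency=1.0):
    """Power per unit area at the focus, in watts per square meter."""
    return sunlight_w_per_m2 * concentration * optical_efficiency


def focal_spot_area(aperture_area_m2, concentration):
    """Area the collected light is squeezed into, in square meters."""
    return aperture_area_m2 / concentration


flux = focal_flux(ASSUMED_SUNLIGHT_W_PER_M2, CONCENTRATION)
spot = focal_spot_area(HYPOTHETICAL_DISH_AREA_M2, CONCENTRATION)
print(f"flux at focus: {flux / 1e6:.1f} MW per square meter")
print(f"focal spot: {spot * 1e4:.0f} square centimeters")
```

With these assumed inputs, the light that falls on the whole dish lands on a patch about the size of a paperback cover, at something like a megawatt per square meter before losses. That makes the burning two by four a lot less surprising.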

Some of the students founded a startup, RawSolar, to take the idea to the marketplace.

The current model produces steam, which can be used in a number of ways, including generating electricity. Another idea (posted as a YouTube comment, actually) is to put a thermal-electric resonator at the focal point and generate straight up electricity from the concentrated electromagnetic radiation of the sun (similar in function but different in design from a photovoltaic cell).

It’s a tough climate to be starting a company, but I really hope these guys at RawSolar make it. At a time when there’s so much competing information and technology, and so much disagreement about the expense and scalability of clean energy with and without subsidies, this device is simple, efficient, and cheap. It represents a democracy of innovation as well. It wasn’t some big VC-funded company that built this. It was a handful of MIT kids in a class. Successes like this one make the solutions to our energy crisis seem (naively) simple. But given the generally depressing climate of energy policy and the ever-looming specter of global warming, I can do with a bit of naive optimism.

To succeed, they probably will need some VC funding to get off the ground. They’ve got some smart people on their team, so hopefully they’ll make it to production. If (When) they do start cranking these out, though, I want to get one for my roof.

21st century shop class

June 17, 2009

A report (summary, handy comparison tool) put out today by the American Institutes for Research describes the state of American math proficiency in fourth and eighth grades compared to many other countries.

The gist, surprise surprise, is that the U.S. lags a good bit behind Asian countries like Japan, Taiwan, South Korea, Singapore, and Hong Kong. Many have called for the U.S. to reinvigorate its math and science education program so that we can churn out more scientists and engineers to keep up with everyone else in the market of ideas, and ideas abound for how to do this.

I won’t advocate for or against any of these proposals, but instead will throw one of my own ideas into the ring.

If we’re going to throw some money at the dearth of kids going into science and engineering careers, let’s start by making science and engineering more than just intellectually interesting. I think the percentage of kids who like building stuff and tinkering is far larger than the percentage who eventually decide they like the strictly academic aspects of these fields. Unfortunately, many of those who don’t make it into the field by high school fall off because “they’re not good at math,” or they find it “boring” or “not relevant to their lives.” Ultimately, to make it into science and engineering fields, students must overcome these obstacles, but we can give them a good bit of the kinetic energy required to get over those hills. Simply put, we make them like the actual practice of science and engineering and worry about the theory later.

(You’ll notice that I’ve seemingly forgotten about math, but while the mathematicians usually have a different philosophy than scientists and engineers, I think we can also overcome the barriers that middle and high school math poses in a similar way as the science barriers through my proposal).

Whatever happened to shop class, where you build birdhouses out of wood and maybe even get to do some metal working? I haven’t heard of its still existing in many schools (public or private) these days, and its disappearance makes sense given ever-tightening budgets and ever-escalating safety concerns (how many people trust an eighth grader with a bandsaw?). While making birdhouses and the like can be quite enjoyable, we should extend the class to encompass a much broader spectrum of the sciences.

We need a new kind of shop class (or, even better, middle through high school curriculum) where the students work on well-defined projects that give them latitude to be creative and to take initiative. These projects can be things like customizing bicycles, making trebuchets, creating robots to do small tasks, developing simple wind turbines and fuel cells and solar arrays, taking apart a car engine, building an electric motor. The possibilities are many and quite scalable in complexity and knowledge prerequisites. In sixth grade, the students can build and program simple robots using the Lego Mindstorms kits, and in twelfth grade they can build them using breadboards, servos, and real programming. Students could construct a composting chamber where they introduce different types of organisms, test the chemical properties of the chamber, and monitor its progress over time. Sites like Make Magazine and Instructables are full of projects like this.

Projects can run the gamut from biology and chemistry to physics and environmental science. The main key, though, is that they must be self-directed and involve actually building things. Students can learn the practical knowledge necessary along the way. Self-directed learning is, after all, another important trait of scientists and engineers. The teacher would take a very hands-off approach, walking around providing little bits of guidance here and there but mostly staying out of the way.

I’ve seen classes set up like this. They’re art classes and are often incredibly effective. Students come in on their free periods because it’s relaxing, almost, to work without direct teacher involvement and produce something they’re proud of.

Of course, in order to run a class like this, it takes a teacher who is energetic and motivated. Anyone can teach science from a textbook.

A class like this could be an elective, a supplement, because I doubt many schools will want to give up on the “hard sciences.” It should be an elective, for the main goal is to give kids the motivation they need to make it through the more academic parts of the disciplines.

I don’t fool myself that many schools will adopt a class like this, or that it will become any kind of national initiative, but when I’m back teaching in a high school, I’ll do whatever it takes to get a class like this underway.

why bother with censorship?

June 17, 2009

If you’ve been paying any attention to the news over the last two days, you’ll undoubtedly have heard breathy congratulation for how the protesters in Iran have used new media like Twitter and Facebook to circumvent the Iranian government’s shutdown of SMS (text) messaging, BBC Persia, and (briefly before the election) Facebook.

You probably also have heard that China’s patchwork internet firewall and monitoring system is fairly easy to circumvent via a simple proxy server. Here’s a post on how to do just that.

On a more personal note, the high school where I recently finished teaching had web filtering software to block pornography sites, Facebook, and other “non academic” sites. Having proctored the computer lab many, many times, I can tell you that the students have easily figured out how to get around all of these restrictions.

Clearly, the efforts of Iran and China (and possibly others I don’t know about) to “protect” their citizens from some areas or avenues of information don’t work terribly well. Thanks mostly to the internet, stopping communication when it really wants to happen is like the levees during Katrina trying to hold back Lake Pontchartrain.

Sure, these “protective” measures do work to some extent. Some say that today’s (Tuesday) information coming out of Iran is far less than that of yesterday due to an increase in Iran’s restrictive measures. Undoubtedly, China’s firewall does hinder some people’s access to information.

Nevertheless, if you’re a government launching a censorship campaign, you better be damn sure it’s pretty airtight, since the stigma of censorship in the eyes of Western countries is pretty high, and it foments frustration in your own (younger, more tech-savvy) population. Short of banning the internet outright, I can’t really see how a government could effectively stifle subversive organization and/or access to information.

If I were a repressive state, I’d give up on censorship and go instead for (non-state run) propaganda and controlling the apparatus of education. Iran and China (and others) also employ this form of opinion molding and would do well to concentrate their efforts in this area, which is a bit harder for an outsider to show is rigged.

As the internet becomes even more the dominant form of communication, our global interconnectedness will only increase in the future. At some point, these governments must realize that restricting this access to information only undermines their credibility and viability both within and without their borders. It will be interesting to see how long it takes them to figure this fact out.